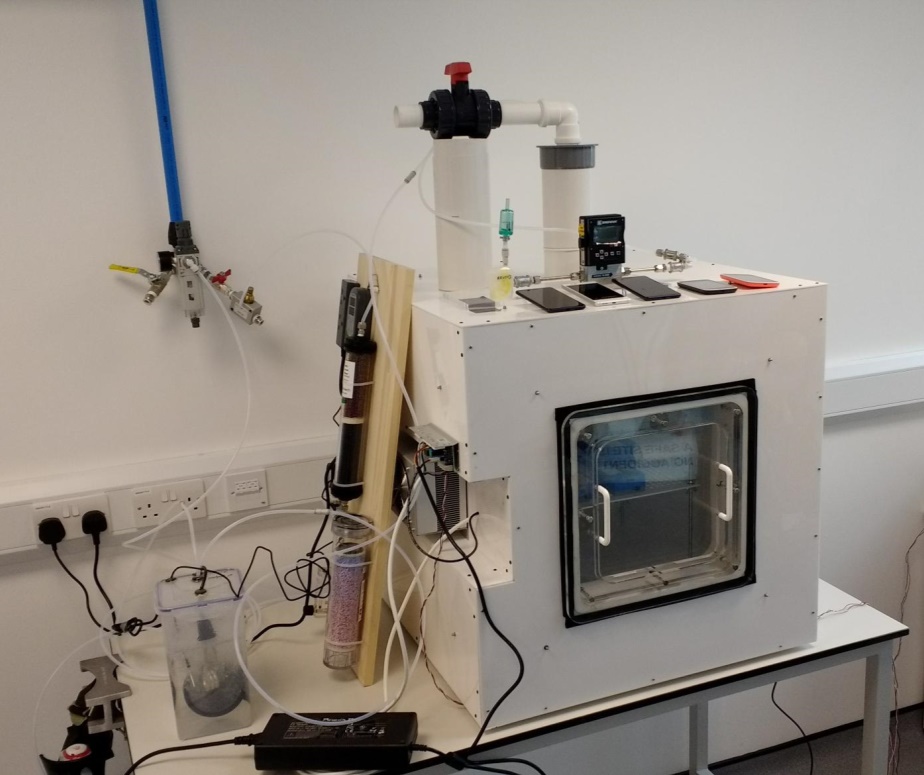

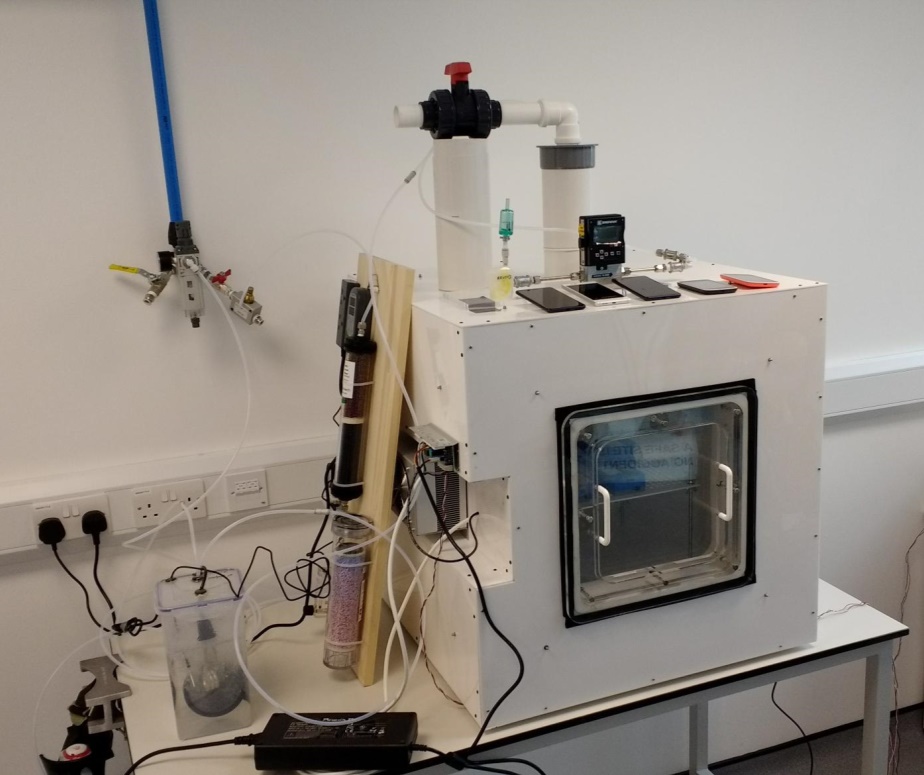

As part of the Pollution Guardian project, our collaborators at the University of Surrey Global Centre for Clean Air Research (GCARE) planned to develop an air pollution test chamber in which affordable sensor devices could be evaluated against high-quality, laboratory-grade measuring instruments.

The objective for the chamber was to be able to set up stable, predictable air pollution and environmental conditions to enable the replication of field conditions from ANYWHERE in the world.

In its first iteration, the chamber, subsequently branded “Envilution”, was capable of controlling:

- Chamber air temperature

- Humidity, including pre-filtering to create “zero air” as a baseline (dried and filtered air)

- Particulate Matter (PM) levels, with options to create particulates from a variety of sources

- Nitrogen dioxide (NO2) gas concentration

By the end of the second quarter of the project, the chamber was ready to start testing with our Pollution Guardian prototypes.

Figure 1: Envilution air pollution test chamber created by the University of Surrey

The chamber conditions (temperature, relative humidity, particulate matter level and NO2 concentration) were sampled from a port in the chamber using the same high-quality instrumentation mentioned in the previous blog.

In our testing, we planned to explore how the prototype sensor units behaved as the levels of temperature, humidity, particulate matter and NO2 gas concentration changed. Whilst some of these tests were straightforward to set up, others, such as humidity testing, turned out to be trickier.

When weather websites predict humidity levels, they usually mean the “relative humidity”. This is a measure, from 0 to 100%, of how much water vapour the air is carrying relative to the maximum it is capable of carrying at that air temperature and pressure. As you get toward 100%, a lot of condensation can form on surfaces and, in warm conditions, you can struggle to keep cool by sweating.
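To make the definition concrete, here is a minimal Python sketch of how relative humidity can be calculated from the water vapour pressure and the air temperature. It is not taken from the project: the function names are illustrative, and the saturation vapour pressure uses a Magnus-type approximation with one commonly quoted set of coefficients, which is an assumption of this example.

```python
import math


def saturation_vapour_pressure_hpa(temp_c: float) -> float:
    """Approximate saturation vapour pressure over water, in hPa.

    Magnus-type approximation; the coefficients (6.112, 17.62, 243.12)
    are one commonly quoted set and are an assumption of this sketch.
    """
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def relative_humidity_percent(vapour_pressure_hpa: float, temp_c: float) -> float:
    """Relative humidity: actual vapour pressure as a percentage of saturation."""
    return 100.0 * vapour_pressure_hpa / saturation_vapour_pressure_hpa(temp_c)


# Air that is saturated at 10 degC holds the same amount of water vapour
# after being warmed to 20 degC, but its relative humidity drops because
# warmer air can carry more.
vapour_pressure = saturation_vapour_pressure_hpa(10.0)
rh_at_10 = relative_humidity_percent(vapour_pressure, 10.0)
rh_at_20 = relative_humidity_percent(vapour_pressure, 20.0)
print(f"RH at 10 degC: {rh_at_10:.1f}%")
print(f"RH at 20 degC: {rh_at_20:.1f}%")
```

The point of the example is that relative humidity depends on temperature as well as on water content, which is why the chamber temperature has to be held steady during a humidity test.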

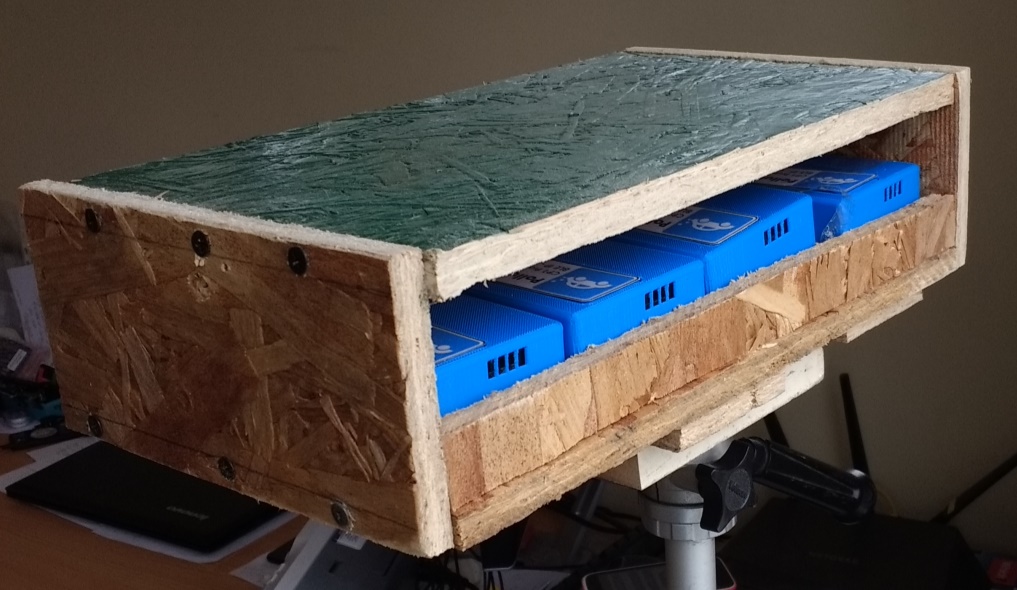

In our lab tests, we aimed to see how our sensors responded overall to the relative humidity in the range of 10-85%. The initial approach was to use an aquarium to generate the humidity and mix this with “zero air” (cleaned and dried) to achieve a target relative humidity.

Figure 2: Zero air combining with water vapour from the aquarium
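As a rough illustration of the mixing idea, the Python sketch below estimates what share of the total flow would need to come from the humid stream to hit a target relative humidity. It is a simplified model rather than the GCARE procedure: it assumes both streams are at the same temperature and pressure, that they mix ideally with no condensation, and that the function name and default values are purely illustrative.

```python
def humid_flow_fraction(target_rh: float,
                        humid_rh: float = 100.0,
                        dry_rh: float = 0.0) -> float:
    """Fraction of the total flow to take from the humid stream.

    Assumes ideal mixing of two streams at the same temperature and
    pressure, so the mixed relative humidity is the flow-weighted
    average of the two inputs. All values are percentages.
    """
    if humid_rh <= dry_rh:
        raise ValueError("humid stream must be more humid than the dry stream")
    if not dry_rh <= target_rh <= humid_rh:
        raise ValueError("target must lie between the dry and humid values")
    return (target_rh - dry_rh) / (humid_rh - dry_rh)


# Example: aiming for 85% when the humid stream only reaches 95%.
fraction = humid_flow_fraction(85.0, humid_rh=95.0)
print(f"Humid stream share of total flow: {fraction:.2f}")
```

In this simple model, the closer the target gets to the humidity of the wet stream, the more of the flow has to come from that stream, leaving little room for the zero air.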

As we discovered, it was quite hard to turn zero air into high-humidity air without also causing condensation in the ducting leading to the test chamber. The GCARE team were, however, resourceful with practical techniques: we explored the other parts of the humidity range using a nebuliser to create fine water droplets, and reached the higher end of the range by rearranging the connections into the chamber.

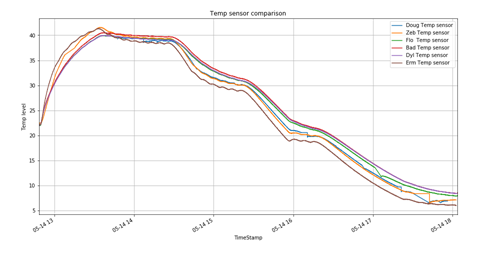

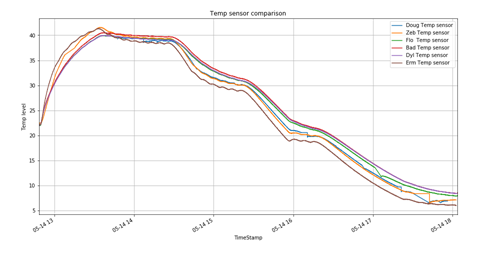

I will say more about the performance of our sensors in the chamber in subsequent blogs. For now, here is a brief look at the results from our temperature sensor during a temperature test.

Figure 3: CP1 prototype temperature responses during a lab heating/cooling experiment

The temperature readings from the CP1 prototype units were generally well correlated with one another, but slightly higher than the recorded chamber temperature. This was attributable to the heat dissipated inside the prototype when it is operating.
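For readers who want to check this kind of behaviour in their own data, the Python sketch below computes the two quantities described: the average offset of a prototype from the reference, and how well the two series are correlated. The function names are illustrative and the sample readings are invented placeholder values, not measurements from the chamber.

```python
import math
from statistics import mean


def mean_offset(prototype: list[float], reference: list[float]) -> float:
    """Average amount by which the prototype reads above the reference."""
    if not prototype or len(prototype) != len(reference):
        raise ValueError("series must be non-empty and the same length")
    return mean([p - r for p, r in zip(prototype, reference)])


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(sxx * syy)


# Invented placeholder readings in degC (NOT data from the experiment).
reference_c = [20.0, 25.0, 30.0, 25.0]
prototype_c = [21.5, 26.5, 31.5, 26.5]

offset = mean_offset(prototype_c, reference_c)
correlation = pearson_r(prototype_c, reference_c)
print(f"Mean offset: {offset:.2f} degC, correlation: {correlation:.3f}")
```

A steady positive offset combined with a high correlation is the pattern you would expect from self-heating: the sensor tracks the changes faithfully but sits a little above the true air temperature.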